The Myth of AI Leisure: Why Judgment Is the Final Frontier

As AI scales execution, humans scale consequence. You won’t work less. You’ll matter more.

We’ve always told ourselves the same lie: that the next technology will finally set us free.

Spreadsheets would eliminate accounting drudgery. Email would make communication seamless. Now, AI promises leisure. Agents will do the work. You’ll sip coffee. The business will run itself.

But that’s not what’s happening.

Work hasn’t disappeared. It’s shifted, and the stakes are higher than ever.

AI Doesn’t Reduce Work. It Refactors It.

In the AI-native enterprise, you don’t perform the task. You authorize it. You shape it. You absorb the consequences of its outcome.

The number of decisions you make goes down, but the weight of each one goes up. Instead of acting constantly, you act precisely: when judgment, ethics, and trust are on the line. That judgment becomes the most valuable and irreducible asset in the entire system.

What We Mean by Judgment

In this series, “judgment” isn’t just subject-matter expertise or quick intuition. Those can often be learned by humans or machines through repetition, training, and data.

Judgment, as we define it here, is the uniquely human capacity to integrate context, values, consequences, and narrative into a decision, and to be accountable for it.

It’s not just knowing the right answer. It’s knowing when to act, when to wait, and when to change the question entirely. Judgment is where:

Facts meet values

Context shapes meaning

Choices carry consequence beyond the immediate outcome

It’s the decision-making layer that AI can inform, but not embody.

The Evolution of Human-in-the-Loop

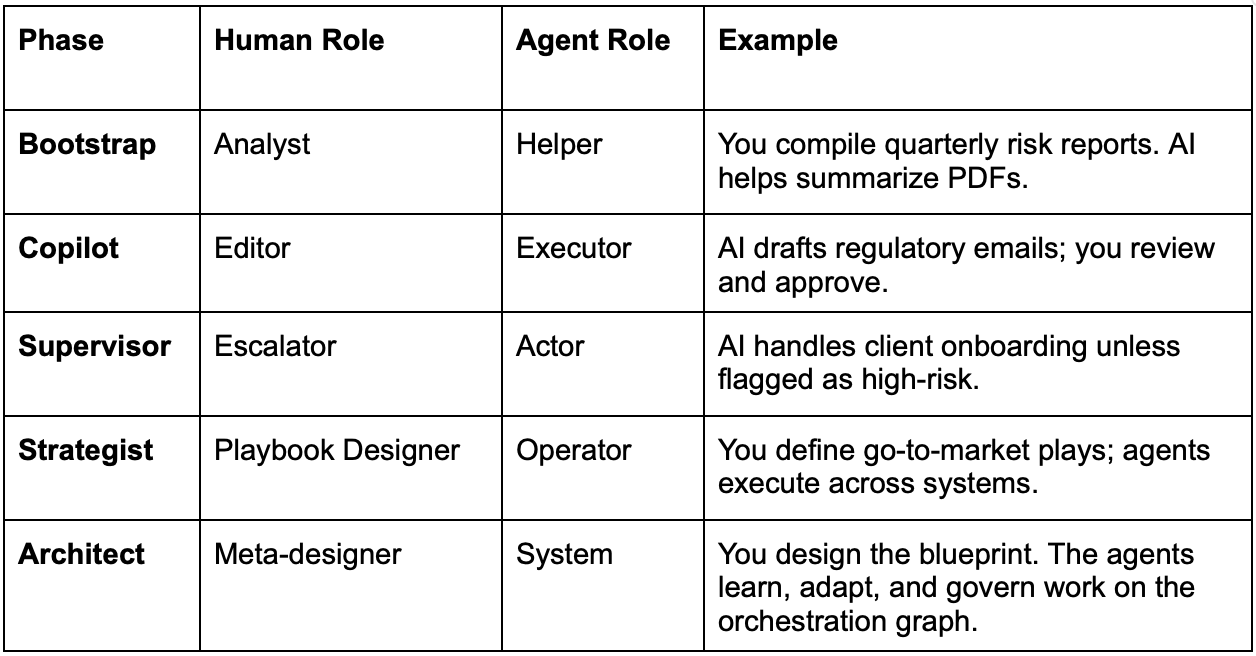

“Human in the loop” sounds like oversight. But it’s actually orchestration, and like any good system, it evolves.

You design the blueprint. The agents learn, adapt, and govern work on the orchestration graph.

This isn’t a slow fade from relevance. It’s a climb. You move from execution to judgment to architecture. Your influence compounds with every step.

Humans Don’t Disappear. They Become the Circuit Breaker.

As agents get smarter, the bar for human intervention rises.

You’re not there to approve drafts. You’re not there to babysit workflows. You’re there for the decisions the system can’t afford to get wrong.

When ambiguity overrides automation

When judgment outranks optimization

When failure means consequence, not just error

In that moment, the orchestration graph routes to you.

You're not the line worker anymore. You're the circuit breaker: the trusted safeguard, the intelligent escalation point, the final authority before impact.

Judgment Is the Final Scarcity

Execution is cheap. Inference is infinite. Models scale.

But judgment does not.

Judgment is contextual. It's political. It's narrative. It's human.

And in a world where every process can be automated, the competitive edge becomes knowing what not to automate: when to insert yourself, when to override the system, or when to say, “Not this one.”

That isn’t just judgment. That’s agency.

This Is a Shift in Power, Not Productivity

This isn’t just about work changing. This is about who holds institutional power in a world where AI runs the firm. In the past, power concentrated where labor concentrated. Now, power concentrates where judgment concentrates.

That means:

Leaders need to design systems that escalate intelligently

Organizations need to define who gets to decide, not just what gets done

Humans become the governors of values, not just tools

The AI-native enterprise won’t be human-free. It will be human-dense, with decision-making concentrated in the moments that matter most. And those who are trusted to decide will run the company.

AI didn’t replace you. It repositioned you.

The only question now is: When the system routes to you, will you be ready to choose?

This is the first in a series exploring how AI changes not only how we work, but also how decisions are made. Part II, “Where Judgment Concentrates,” drops next week. Thanks to Mike Donahue for brainstorming this piece and the ones that follow.

Brilliantly written piece.

For the past decade or so, rising ambiguity and uncertainty have been a centerpiece of pretty much all business literature (at some point, VUCA became one of the easiest squares to mark in buzzword bingo).

The nature of LLMs is that they provide the most likely answer (yes, I'm oversimplifying here, but to a large degree that's what these models do). It follows that when the most likely answer is good enough, AI is pure gold.

However, under conditions of high uncertainty, the most likely answer is barely more likely than, well, any other answer. Ultimately, it's all uncertain. It's all ambiguous. *By definition*, we can't have a good enough answer up front.

That's where judgment kicks in. We pull in all sorts of distant, non-obvious context. We trust subconscious impulses that we can't easily explain. Now, if an AI model had access to all that, it might even produce a similar outcome. But it doesn't. And it can't.

Heck, we often can't explain why our judgment favors this over that.

As cognitive science teaches, we make decisions first and then find justifications for them. That part won't be easily outsourced to the machine, because there's no explicit training data for it.

At least not unless AI develops actual world models and reasoning.