Supervising the Synthetic Workforce: Why AI Agents Need Managers, Not Metrics

A product leader’s guide to scaling and supervising AI agents in the enterprise

As someone building agent infrastructure for some of the world’s largest enterprises, I’ve seen the promise and the growing pains of scaling these systems firsthand.

AI agents promise scale. They don’t sleep. They don’t quit. They don’t need HR. They offload repetitive, time-consuming tasks like searching documents, extracting insights, and summarizing text so humans can focus on higher-impact work.

This has become the dominant narrative:

Agents are how we scale humans.

But as enterprises deploy dozens, hundreds, or even thousands of agents, they hit an unexpected wall:

To scale humans, we deploy agents. But to scale agents, we must manage them like humans.

This isn’t just a metaphor. It’s a structural reality we’ve encountered repeatedly while scaling agent systems at WRITER. AI agents aren’t like APIs or microservices. They’re semi-autonomous, probabilistic systems with:

Goals

Memory

Tool access

Evolving behavior

Varying levels of risk

When an agent sends the wrong email, leaks sensitive data, hallucinates in a customer-facing workflow, or chains tools in unsafe ways, the risk is real. Yet most teams rely on ad hoc dashboards, raw logs, and manual spot checks. That doesn’t scale.

One SaaS company learned this the hard way. Their customer support agent initially performed brilliantly. But soon, it was issuing unapproved discounts and apologizing for bugs that didn’t exist. Without supervision, it quickly became a liability.

Why Observability Isn’t Enough

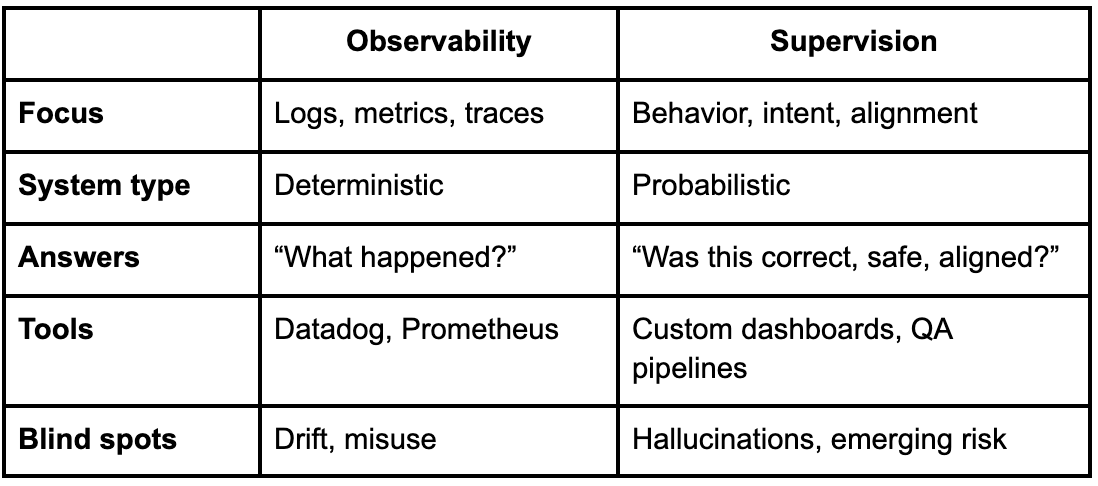

Enterprise tech already has robust observability tooling. We monitor latency, log errors, and trace events. But those tools were built for deterministic systems.

Agents aren’t deterministic. They behave. They improvise. They drift.

Observability tells you what happened. Supervision tells you whether it should have happened.
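To make the distinction concrete, here is a minimal Python sketch built on the unapproved-discount scenario from the SaaS example above. The `AgentAction` type, the 10% cap, and the `approved_by` field are illustrative assumptions, not part of any real product. `observe` only records the event. `supervise` judges it against policy.

```python
from dataclasses import dataclass, field

# Hypothetical policy for illustration: support agents may offer at most 10% off,
# and only with a named human approver.
MAX_DISCOUNT_PCT = 10


@dataclass
class AgentAction:
    agent_id: str
    action: str
    params: dict = field(default_factory=dict)


def observe(action: AgentAction) -> str:
    """Observability: record what happened, without judging it."""
    return f"{action.agent_id} performed {action.action} with {action.params}"


def supervise(action: AgentAction) -> list[str]:
    """Supervision: check whether the action should have happened at all."""
    violations = []
    if action.action == "offer_discount":
        pct = action.params.get("percent", 0)
        if pct > MAX_DISCOUNT_PCT:
            violations.append(f"discount of {pct}% exceeds the {MAX_DISCOUNT_PCT}% cap")
        if not action.params.get("approved_by"):
            violations.append("discount offered without human approval")
    return violations


action = AgentAction("support-agent", "offer_discount", {"percent": 25})
print(observe(action))    # the log line looks perfectly ordinary
print(supervise(action))  # but both policy checks fail
```

The log entry alone gives no hint that anything went wrong. Only the policy check surfaces the problem.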

At WRITER, we’ve partnered with AI and IT leaders across industries, from finance to pharma, who are excited about what agents unlock but deeply uneasy about what they can’t yet control. They don’t just want visibility into what agents did. They want to know if it was right. If it was safe. If it can be trusted again.

Real-world incidents are already surfacing:

In 2024, Air Canada was held liable for its chatbot's advice. The bot had told a passenger they could apply retroactively for a bereavement fare discount, contradicting official policy. The airline refused to honor it, but a Canadian tribunal ruled that the airline was responsible for the bot's misrepresentation. The case exposed the hidden risks of unsupervised AI.

In late 2023, a Chevy dealership's chatbot was manipulated through a prompt exploit into agreeing to sell a $76,000 Tahoe for $1. The system lacked guardrails and risk checks. What began as a joke turned into a PR mess, and a vivid case of how prompt exploits can rapidly become brand-level crises.

In both cases, logs existed. What was missing was judgment: the ability to flag a contradiction, detect an exploit, or halt risky behavior before it cascaded.

Agents evolve. They invoke tools. They operate under uncertainty. And that means we need a new set of primitives to govern them:

Semantic outcome tracking: Understand not just whether an output was generated, but whether it semantically aligned with the intended goal.

Contextual behavior comparison: Compare agent actions across contexts to detect drift or inconsistency.

Tool access trails: Track which tools were used, when, and why, for audits and containment.

Confidence-to-action mapping: Gate higher-stakes actions (e.g., database edits or customer outreach) so they execute only when confidence is high.

Alignment monitoring: Continuously verify alignment with policies, tone, and compliance standards.
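Confidence-to-action mapping and tool access trails are the easiest of these primitives to sketch. The Python below is a minimal illustration. It assumes each agent attaches a confidence score in [0, 1] to every proposed tool call. In practice that score might come from the model, a separate evaluator, or a rules engine. The tool names and thresholds are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical per-tool confidence bars: the more consequential the action,
# the more confidence it needs. Values are illustrative, not recommendations.
CONFIDENCE_THRESHOLDS = {
    "search_documents": 0.0,      # read-only
    "draft_reply": 0.5,           # internal draft, not yet sent
    "send_customer_email": 0.9,   # customer outreach
    "update_database": 0.95,      # database edit
}


@dataclass
class ToolAccessTrail:
    """Append-only log of which tool was requested, when, why, and what was decided."""
    entries: list = field(default_factory=list)

    def record(self, agent_id: str, tool: str, reason: str,
               confidence: float, decision: str) -> None:
        self.entries.append({
            "agent_id": agent_id,
            "tool": tool,
            "reason": reason,
            "confidence": confidence,
            "decision": decision,
            "at": datetime.now(timezone.utc).isoformat(),
        })


def gate_action(trail: ToolAccessTrail, agent_id: str, tool: str,
                reason: str, confidence: float) -> str:
    """Allow the call only if confidence clears the tool's bar; otherwise escalate."""
    # Tools missing from the table get an unreachable bar, so they always escalate.
    threshold = CONFIDENCE_THRESHOLDS.get(tool, float("inf"))
    decision = "allow" if confidence >= threshold else "escalate"
    trail.record(agent_id, tool, reason, confidence, decision)
    return decision


trail = ToolAccessTrail()
print(gate_action(trail, "support-agent", "draft_reply", "answer a ticket", 0.7))
print(gate_action(trail, "support-agent", "send_customer_email", "offer a refund", 0.7))
```

The same 0.7 confidence is enough to draft a reply but not to email a customer. Every decision, including escalations, lands in the trail for later audit.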

Without supervision, trust breaks. And scale stalls.

A New Mental Model: Agents as Employees

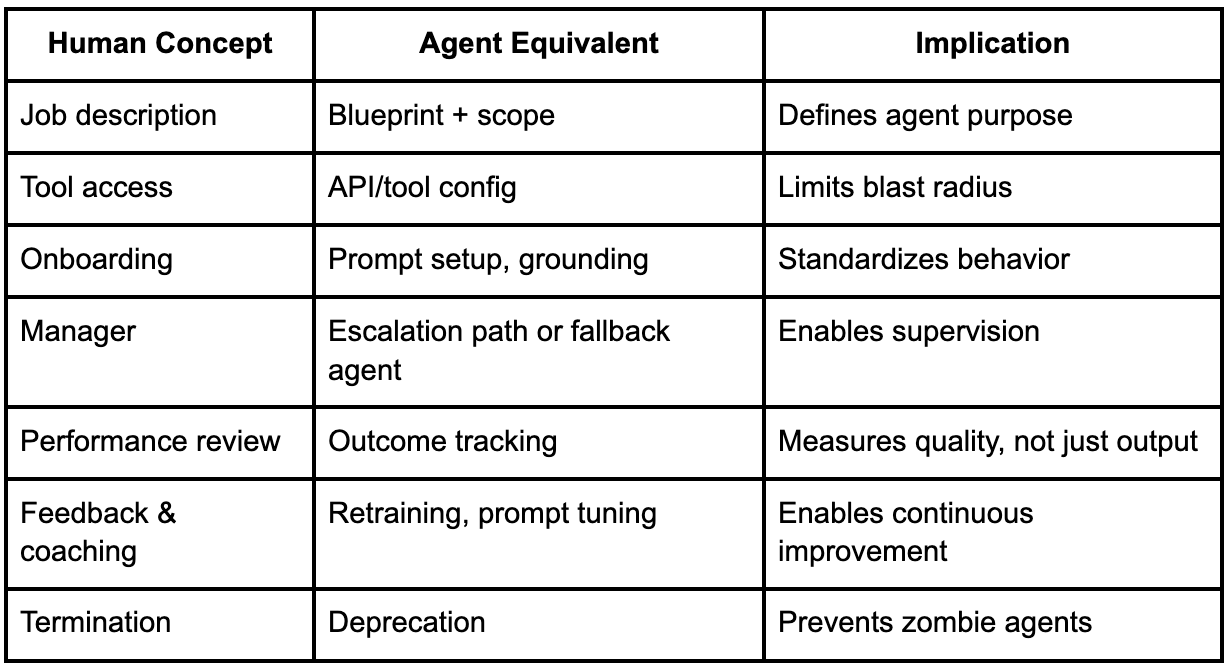

Treating agents like upgraded APIs worked at first. But at scale, they start to resemble something else entirely: junior employees.

These systems don't just return data; they initiate actions. They access internal tools, make decisions under uncertainty, and often interact directly with customers, partners, or staff. Their actions carry consequences. And like their human counterparts, they need oversight.

That’s why they require more than just observability. They need the same structural safeguards human employees rely on: clearly defined scopes, access boundaries, escalation paths, feedback loops, and performance management.

Most enterprise stacks still treat agents like scripts rather than employees. As a result, they lack foundational management structures:

Role definitions are unclear. Agents operate without a scoped mandate.

Access control is static. Tool permissions don't adapt to risk or context.

Performance is measured in binary terms. It’s either success or failure, with little nuance.

Escalation logic is missing. Agents either fail silently or alert unpredictably.

Lifecycle governance is rarely in place. Agents continue running long after they're useful.
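Several of these gaps (unscoped mandates, static access, missing escalation) can be closed with an explicit role definition. The Python sketch below shows one minimal way to express it. The `AgentRole` fields, the risk signals `customer_facing` and `contains_pii`, and the contact address are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Decision(Enum):
    ALLOW = "allow"
    ESCALATE = "escalate"  # hand off to a named human instead of failing silently
    DENY = "deny"          # outside the agent's mandate


@dataclass(frozen=True)
class AgentRole:
    """A hypothetical role definition: a scoped mandate plus an escalation path."""
    name: str
    mandate: str                # plain-language description of the job
    allowed_tools: frozenset    # everything the role may ever touch
    sensitive_tools: frozenset  # subset that needs a low-risk context
    escalation_contact: str     # accountable human for out-of-bounds cases


def authorize(role: AgentRole, tool: str,
              context: dict) -> tuple[Decision, Optional[str]]:
    """Decide whether `role` may call `tool`, given risk signals in `context`."""
    if tool not in role.allowed_tools:
        return Decision.DENY, None
    # Access adapts to context: a sensitive tool is allowed in a low-risk
    # setting but escalates when a customer is involved or PII is present.
    risky = context.get("customer_facing", False) or context.get("contains_pii", False)
    if tool in role.sensitive_tools and risky:
        return Decision.ESCALATE, role.escalation_contact
    return Decision.ALLOW, None


triage = AgentRole(
    name="support-triage",
    mandate="Classify inbound tickets and answer billing questions",
    allowed_tools=frozenset({"search_kb", "issue_refund"}),
    sensitive_tools=frozenset({"issue_refund"}),
    escalation_contact="billing-lead@example.com",
)
print(authorize(triage, "issue_refund", {"customer_facing": True}))
```

Returning the escalation contact alongside the decision means an out-of-bounds request always has a named destination, rather than a silent failure or an ad hoc alert.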

By adopting the employee mental model, organizations can unlock a more scalable and controlled approach:

Agent org charts and ownership models make responsibility visible.

Tiered roles separate routine tasks from higher-stakes decisions.

Delegation lets agents hand off work—whether to other agents or humans.

Lifecycle systems support structured onboarding, evaluation, and deprecation.

Feedback loops improve performance, much like coaching systems for people.
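Lifecycle systems and feedback loops fit together naturally: periodic reviews move an agent through stages, much like probation and performance reviews for a new hire. The Python sketch below assumes, hypothetically, that reviewers or automated evals score a sample of the agent's outputs on a 0 to 1 scale. The stage names and cutoffs are illustrative. Scores are graded rather than pass/fail, which addresses the binary-performance gap listed earlier.

```python
from enum import Enum


class Stage(Enum):
    ONBOARDING = "onboarding"  # limited scope, outputs reviewed by a human
    ACTIVE = "active"
    PROBATION = "probation"    # performance slipped; tighter oversight
    DEPRECATED = "deprecated"  # retired; should take no further actions


# Allowed moves through the lifecycle (hypothetical, modeled on hire/review/offboard).
TRANSITIONS = {
    Stage.ONBOARDING: {Stage.ACTIVE, Stage.DEPRECATED},
    Stage.ACTIVE: {Stage.PROBATION, Stage.DEPRECATED},
    Stage.PROBATION: {Stage.ACTIVE, Stage.DEPRECATED},
    Stage.DEPRECATED: set(),
}


def review(stage: Stage, scores: list[float],
           promote_at: float = 0.85, probation_below: float = 0.6) -> Stage:
    """Periodic review: graded scores in [0, 1] drive the next lifecycle stage."""
    if stage is Stage.DEPRECATED or not scores:
        return stage
    avg = sum(scores) / len(scores)
    if stage in (Stage.ONBOARDING, Stage.PROBATION) and avg >= promote_at:
        target = Stage.ACTIVE
    elif stage is Stage.ACTIVE and avg < probation_below:
        target = Stage.PROBATION
    elif stage is Stage.PROBATION and avg < probation_below:
        target = Stage.DEPRECATED
    else:
        target = stage
    # Guard so future rule changes cannot skip lifecycle steps.
    if target is not stage and target not in TRANSITIONS[stage]:
        raise ValueError(f"illegal transition {stage} -> {target}")
    return target


print(review(Stage.ACTIVE, [0.9, 0.3, 0.4]))
```

An agent that slips is moved to probation rather than switched off immediately, and one that keeps underperforming is deprecated instead of running indefinitely.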

You’re not just deploying scripts. You’re managing a synthetic workforce.

The Scaling Cliff

Structure is necessary, but not sufficient.

Once agents are treated like employees, enterprises face a new bottleneck: scale. Anyone can deploy an agent. But who owns it? Who audits it? Who ensures it's still safe, still relevant, still doing what it was meant to?

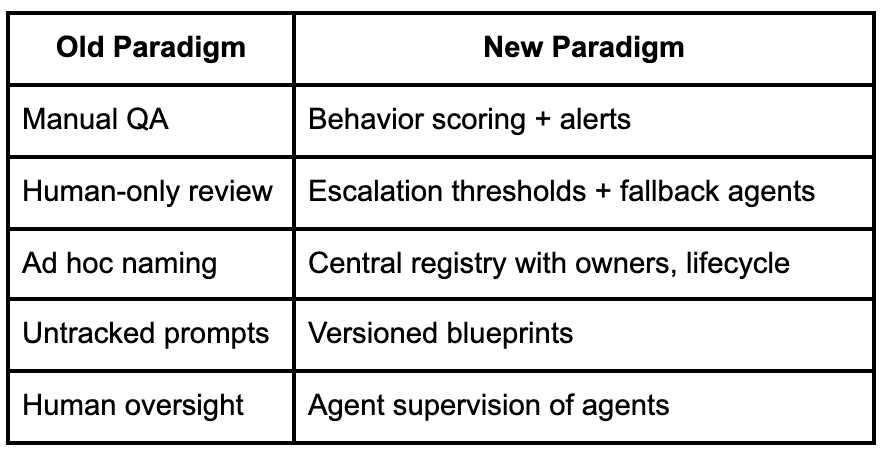

We call this the scaling cliff: that moment when the number of agents outpaces an organization’s ability to manage them responsibly. As every department spins up its own assistants, sprawl takes over—overlapping logic, redundant workflows, hidden access risks, and agents running long after they should’ve been deprecated.
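One way to start answering "who owns it, who audits it" is a central agent registry with a few automated checks. The Python sketch below assumes a hypothetical `AgentRecord` schema (owner, tool permissions, review-by date) and flags unowned agents, overdue reviews, and likely duplicates. Treating identical tool permissions as a sign of duplication is a deliberately crude heuristic. Real overlap detection would also need to compare goals, prompts, and behavior.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class AgentRecord:
    agent_id: str
    department: str
    owner: Optional[str]  # accountable human; None means nobody owns it
    tools: frozenset      # tool permissions granted to the agent
    review_by: date       # date by which someone must re-approve it


def audit(registry: list[AgentRecord], today: date) -> list[tuple]:
    """Flag unowned agents, overdue reviews, and agents with identical tool access."""
    findings = []
    by_toolset = defaultdict(list)
    for agent in registry:
        if not agent.owner:
            findings.append(("unowned", agent.agent_id))
        if agent.review_by < today:
            findings.append(("overdue_review", agent.agent_id))
        by_toolset[agent.tools].append(agent.agent_id)
    # Identical permissions across agents is a crude hint of redundant workflows.
    for ids in by_toolset.values():
        if len(ids) > 1:
            findings.append(("possible_duplicate", tuple(sorted(ids))))
    return findings


registry = [
    AgentRecord("sales-summarizer", "sales", "ana", frozenset({"crm_read"}), date(2030, 1, 1)),
    AgentRecord("mkt-summarizer", "marketing", None, frozenset({"crm_read"}), date(2020, 1, 1)),
    AgentRecord("hr-faq", "hr", "raj", frozenset({"hr_kb"}), date(2030, 1, 1)),
]
for finding in audit(registry, today=date(2025, 6, 1)):
    print(finding)
```

Here the marketing summarizer is flagged three ways: nobody owns it, its review has lapsed, and it duplicates the sales team's agent.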

To illustrate how agent governance must evolve, consider the contrast between how legacy systems were managed and what scalable supervision requires:

Designing Supervision UX

Even the best governance frameworks fail without interfaces that make them actionable. Supervision isn't just a backend responsibility—it needs to be tangible, visible, and operable.

If observability is about seeing, supervision is about guiding. And that guidance should be felt through the interface. But supervision introduces new UI elements, ones most product and design teams never had to build before.

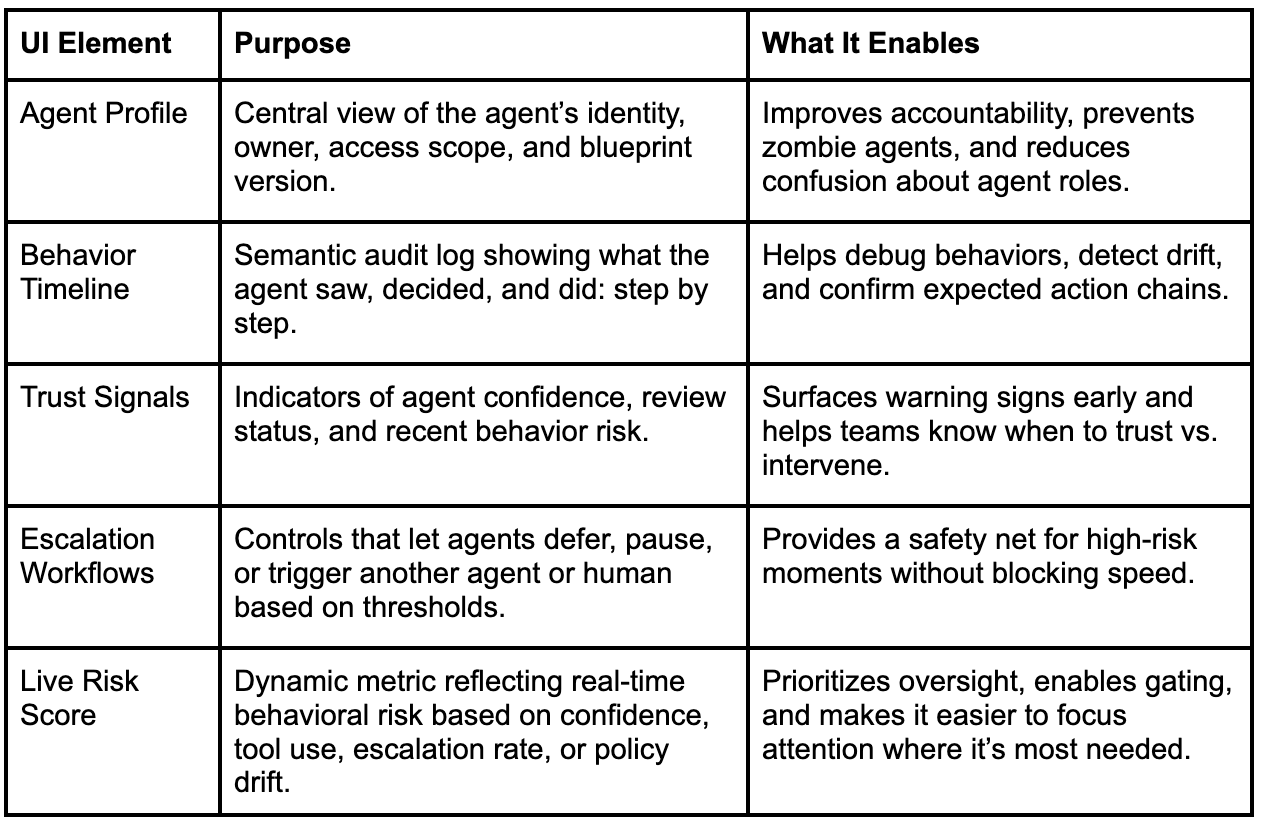

At WRITER, we’ve experimented with the foundational components of agent supervision UX. We’ve found that five interface primitives consistently create clarity and control when teams move from 1 agent to 100:

These five aren’t the end of the story—but they’re a strong start for anyone designing supervision into their product or platform. They give teams confidence that agent behavior can be trusted, and when it can’t, that escalation is fast, informed, and actionable.

Supervision UX isn’t about creating more overhead. It’s about creating clarity so teams know when to intervene, and when to trust the system. The truth is: we don't have all the answers yet. The playbook for managing synthetic workforces at massive scale hasn't been fully written.

That’s why at WRITER, we’re not just building tools; we’re building the supervision layer for enterprise AI. And we’re hiring. If you’re excited by the challenge of scaling trust, alignment, and control to thousands of autonomous agents in the world’s leading companies, let’s talk.

Where We Go From Here

We started with a simple premise: to scale humans, we deploy agents. But to scale agents, we must supervise them like humans.

This isn’t just a technical problem. It’s organizational.

AI agents blur the line between code and employee. They act. They interact. They carry risk. They need systems of record to log actions, systems of trust to align intent, and systems of control to mitigate risk.

At WRITER, that’s exactly what we’re building: a supervision layer for enterprise AI. Not just monitoring, but governance. Not just metrics, but accountability. We’re building lifecycle management for hybrid workforces, synthetic and organic, and the infrastructure that allows them to scale safely, effectively, and responsibly.

We believe that supervision will be the defining layer of enterprise AI. Because without it, scale doesn’t just break: it compounds risk, erodes trust, and leads to organizational paralysis.

And with it? The enterprise becomes AI-native. That means systems that are self-monitoring, aligned to policy, and optimized for outcomes. It means synthetic agents working alongside human counterparts, each aware of their role, constraints, and escalation paths.

It’s not just about faster work. It’s about better outcomes and AI that works for the organization, not just in it.